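The previous article introduced Prometheus, the most popular microservice monitoring tool, and used a development environment to demonstrate Prometheus monitoring itself. In microservice monitoring, monitoring the Kubernetes cluster matters just as much as monitoring Prometheus itself: we need to know the running state of the cluster at all times.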

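A Kubernetes cluster generally needs to be monitored in the following areas:

- Cluster nodes: node metrics such as cpu, memory, disk, and load
- Component status: the running state of components such as kube-scheduler, kube-controller-manager, and kubedns/coredns
- Orchestration: metrics such as Deployment status, resource requests, scheduling, and API latency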

集群节点

node_exporter

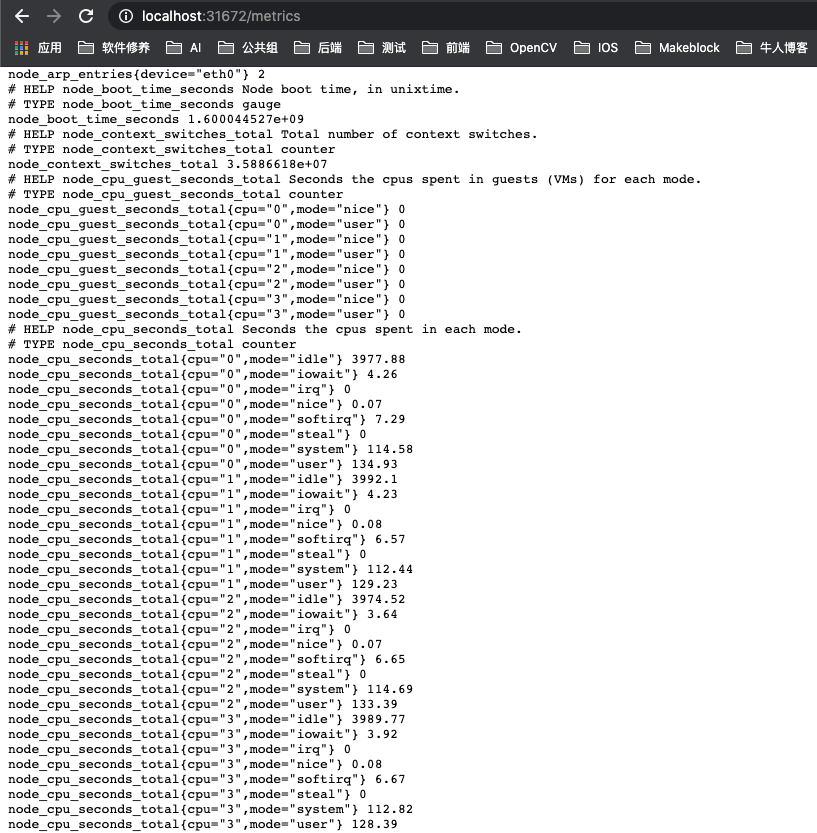

监控集群节点这里推荐使用 Prometheus 官方的 node_exporter。node_exporter 抓取用于采集服务器节点的各种运行指标,比如 arp、conntrack、cpu、diskstats、filesystem、ipvs、loadavg、meminfo,netstat 等,详见enabled-by-default。

从 node_exporter 的名称不难推测,它是上一篇所讲的 exporter。exporter 允许用户在不修改原有程序的情况下暴露指标让任务来抓,这一点有点类似于 Istio 的 Sidecar。由于 node_exporter 是对集群节点的监控,因此,也不难推测可以使用 DaemonSet 来部署 node_exporter。这样每一个节点都会自动运行一个这样的 Pod,如果集群中的节点数改变,也会进行自动扩展。

Deployment

Below is the deployment manifest for node_exporter.

    # node-exporter.yaml
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: node-exporter
      namespace: observability
      labels:
        k8s-app: node-exporter
    spec:
      selector:
        matchLabels:
          k8s-app: node-exporter
      template:
        metadata:
          labels:
            k8s-app: node-exporter
        spec:
          containers:
          - image: prom/node-exporter
            name: node-exporter
            ports:
            - containerPort: 9100
              protocol: TCP
              name: http
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        k8s-app: node-exporter
      name: node-exporter
      namespace: observability
    spec:
      ports:
      - name: http
        port: 9100
        nodePort: 31672
        protocol: TCP
      type: NodePort
      selector:
        k8s-app: node-exporter

Deploy

    $ kubectl apply -f node-exporter.yaml
    daemonset.apps/node-exporter created
    service/node-exporter created

Access

Get the service entry point

    $ kubectl get service -n observability
    NAME            TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
    node-exporter   NodePort   10.111.82.119   <none>        9100:31672/TCP   117s
    prometheus      NodePort   10.98.133.13    <none>        9090:31033/TCP   2d22h

Access the service
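
One way to check the endpoint from the command line is sketched below. It assumes the NodePort 31672 from the Service above and a node address that is reachable from your machine; the `<node-ip>` placeholder and the helper name `node_metric_names` are illustrative and not part of the original setup. The helper reads Prometheus text-format metrics on stdin and lists the distinct metric names that start with node_.

```shell
#!/bin/sh
# Hypothetical helper (not from the article): list the distinct metric names
# beginning with "node_" from Prometheus text exposition format on stdin.
node_metric_names() {
  # Drop comment lines (# HELP / # TYPE), cut each sample line at the first
  # "{" or space so only the metric name remains, then de-duplicate.
  grep -v '^#' | sed -E 's/[{ ].*$//' | grep '^node_' | sort -u
}

# Usage against a live cluster (replace <node-ip> with a real node address):
#   curl -s http://<node-ip>:31672/metrics | node_metric_names
```

If the command prints a list of node_ metric names, node_exporter is reachable through the NodePort.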

更新配置

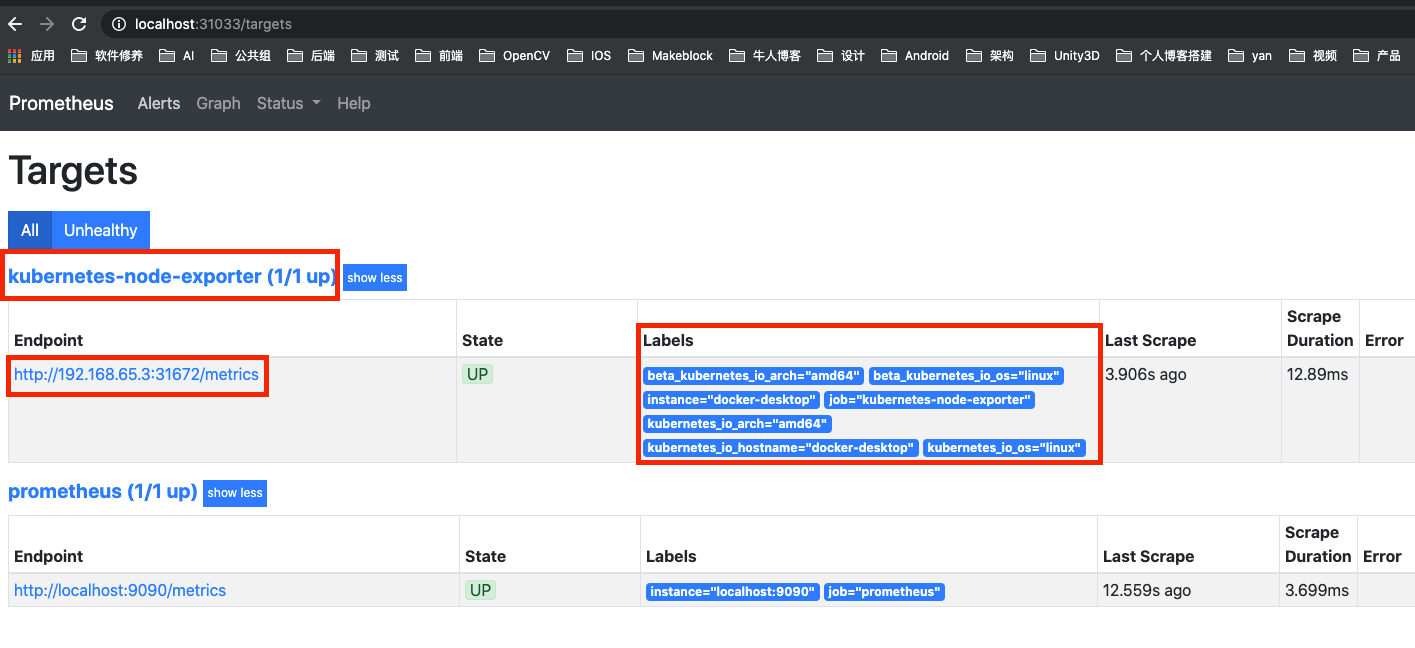

向 Prometheus 配置文件增加 kubernetes-node-exporter job,实现对 node_exporter 暴露指标的抓取。

    # prometheus.yaml
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: prometheus-config
      namespace: observability
    data:
      prometheus.yml: |
        global:
          scrape_interval: 15s
          scrape_timeout: 15s
        rule_files:
        # - "first.rules"
        # - "second.rules"
        scrape_configs:
        - job_name: 'prometheus'
          static_configs:
          - targets: ['localhost:9090']
        - job_name: 'kubernetes-node-exporter'
          scheme: http
          tls_config:
            ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
          bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
          kubernetes_sd_configs:
          - role: node
          relabel_configs:
          - action: labelmap
            regex: __meta_kubernetes_node_label_(.+)
          - source_labels: [__meta_kubernetes_role]
            action: replace
            target_label: kubernetes_role
          - source_labels: [__address__]
            regex: '(.*):10250'
            replacement: '${1}:31672'
            target_label: __address__

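Here kubernetes_sd_configs uses the node role, so Prometheus automatically discovers every node in the Kubernetes cluster and uses it as a target instance of this job. By default, the /metrics endpoint of each discovered node is the kubelet's HTTP endpoint.

tls_config and bearer_token_file point to the CA certificate and the token that the cluster injects into the Pod automatically when it starts; these are the ServiceAccount credentials used for authenticated access such as calls to the Kubernetes API.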

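When Prometheus discovers targets with the node role, it uses port 10250 by default, but the node_exporter Service is exposed on nodePort 31672. This is where the replace action of Prometheus relabel_configs comes in. Before Prometheus scrapes a target, relabeling can dynamically rewrite label values based on the target's metadata; it can also decide, from the same metadata, whether to scrape or ignore the target. Here the rule matches the __address__ label and uses a regular expression to replace the port with node_exporter's 31672.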
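
The effect of this rule can be approximated outside Prometheus with sed. This is only a sketch: the helper name `rewrite_address` and the sample IP address are made up for illustration, and sed stands in for Prometheus's own regex engine. Prometheus anchors relabel regexes at both ends, which is why the sed pattern adds ^ and $.

```shell
#!/bin/sh
# Hypothetical helper mirroring the relabel rule
#   regex: '(.*):10250'   replacement: '${1}:31672'
# Prometheus anchors the regex at both ends, hence the explicit ^ and $.
rewrite_address() {
  printf '%s\n' "$1" | sed -E 's/^(.*):10250$/\1:31672/'
}

rewrite_address '192.168.1.10:10250'   # prints 192.168.1.10:31672
rewrite_address '192.168.1.10:9100'    # no match, prints 192.168.1.10:9100
```

When the regex does not match, the replace action leaves the target label untouched, which the second call illustrates.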

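The configuration also adds a labelmap action, which copies the Kubernetes node labels onto the scraped metrics as Prometheus labels. Without it, the only label identifying the source would be the node's hostname; with it, grouping and filtering in queries becomes much easier.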
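
As a rough illustration of what labelmap does to a label name, the sketch below applies the same regex with sed. The helper name `labelmap_name` is hypothetical. The sample input assumes a node carrying the kubernetes.io/hostname label, which service discovery exposes with unsupported characters replaced by underscores.

```shell
#!/bin/sh
# Hypothetical helper mirroring
#   action: labelmap
#   regex: __meta_kubernetes_node_label_(.+)
# It prints the resulting label name for a matching meta label and prints
# nothing for names that do not match.
labelmap_name() {
  printf '%s\n' "$1" | sed -n -E 's/^__meta_kubernetes_node_label_(.+)$/\1/p'
}

labelmap_name '__meta_kubernetes_node_label_kubernetes_io_hostname'   # prints kubernetes_io_hostname
labelmap_name '__meta_kubernetes_node_name'                           # prints nothing
```

The label value is copied unchanged; only the name is derived from the capture group.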

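Note:

The following meta labels are available for the node role of kubernetes_sd_configs:

- __meta_kubernetes_node_name: the name of the node object
- __meta_kubernetes_node_label_<labelname>: each label of the node object
- __meta_kubernetes_node_annotation_<annotationname>: each annotation of the node object
- __meta_kubernetes_node_address_<address_type>: the first address for each node address type, if it exists

See the official documentation for more.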

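Update the Prometheus configuration file

    $ kubectl apply -f prometheus.yaml
    configmap/prometheus-config configured

Reload Prometheus so that the configuration takes effect

    curl -X POST localhost:31033/-/reload

Note:

31033 is the port to which Prometheus's port 9090 is mapped in the development environment; you can look it up with kubectl get service -n observability.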
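
If you would rather not read the port off the table by eye, the sketch below extracts it from a PORT(S) value. The helper name `node_port_of` is hypothetical, and the input format is assumed to be the port:nodePort/PROTOCOL form shown in the kubectl output above.

```shell
#!/bin/sh
# Hypothetical helper: extract the NodePort from a PORT(S) value such as
# "9090:31033/TCP" (the format printed by `kubectl get service`).
node_port_of() {
  printf '%s\n' "$1" | sed -n -E 's|^[0-9]+:([0-9]+)/[A-Z]+$|\1|p'
}

node_port_of '9090:31033/TCP'   # prints 31033

# Against a live cluster, kubectl can print the field directly:
#   kubectl get service prometheus -n observability \
#     -o jsonpath='{.spec.ports[0].nodePort}'
```

The jsonpath form is usually the more robust choice in scripts, since it does not depend on the table layout.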

验证

查看指标

从上图可以看出,node_exporter、Endpoint 端口、Labels 均按配置文件正确配置。

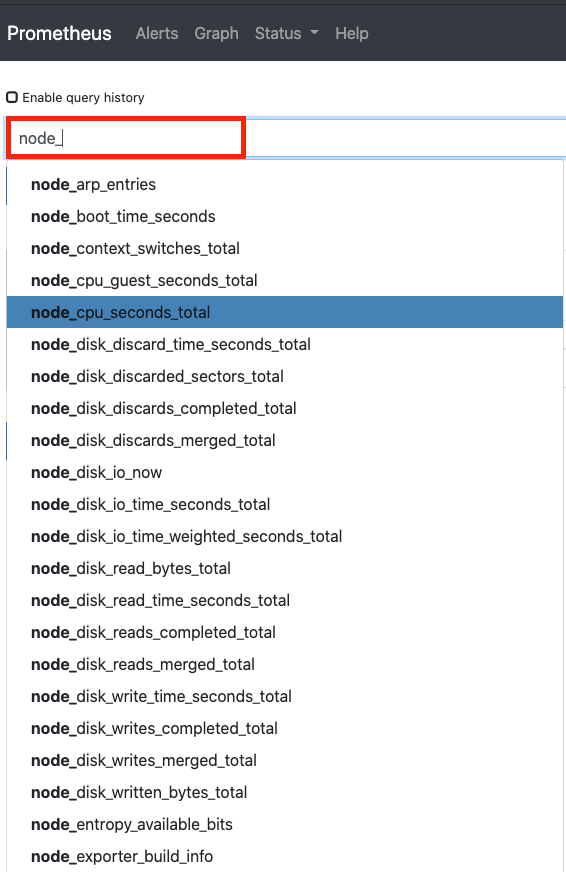

查看 node_ 可以看出,已经增加了节点相关的指标数据。

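Orchestration

kube-state-metrics

To monitor the orchestration state of the cluster, the official Kubernetes kube-state-metrics is recommended. kube-state-metrics listens to the API Server and generates state metrics for resource objects such as Deployment, Node, and Pod. Note that kube-state-metrics only exposes the metrics and does not store them, so we use Prometheus to scrape and store the data.

Deployment

Below are the deployment manifests for kube-state-metrics.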

    # cluster-role.yaml
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      labels:
        app.kubernetes.io/name: kube-state-metrics
        app.kubernetes.io/version: v1.9.7
      name: kube-state-metrics
    rules:
    - apiGroups:
      - ""
      resources:
      - configmaps
      - secrets
      - nodes
      - pods
      - services
      - resourcequotas
      - replicationcontrollers
      - limitranges
      - persistentvolumeclaims
      - persistentvolumes
      - namespaces
      - endpoints
      verbs:
      - list
      - watch
    - apiGroups:
      - extensions
      resources:
      - daemonsets
      - deployments
      - replicasets
      - ingresses
      verbs:
      - list
      - watch
    - apiGroups:
      - apps
      resources:
      - statefulsets
      - daemonsets
      - deployments
      - replicasets
      verbs:
      - list
      - watch
    - apiGroups:
      - batch
      resources:
      - cronjobs
      - jobs
      verbs:
      - list
      - watch
    - apiGroups:
      - autoscaling
      resources:
      - horizontalpodautoscalers
      verbs:
      - list
      - watch
    - apiGroups:
      - authentication.k8s.io
      resources:
      - tokenreviews
      verbs:
      - create
    - apiGroups:
      - authorization.k8s.io
      resources:
      - subjectaccessreviews
      verbs:
      - create
    - apiGroups:
      - policy
      resources:
      - poddisruptionbudgets
      verbs:
      - list
      - watch
    - apiGroups:
      - certificates.k8s.io
      resources:
      - certificatesigningrequests
      verbs:
      - list
      - watch
    - apiGroups:
      - storage.k8s.io
      resources:
      - storageclasses
      - volumeattachments
      verbs:
      - list
      - watch
    - apiGroups:
      - admissionregistration.k8s.io
      resources:
      - mutatingwebhookconfigurations
      - validatingwebhookconfigurations
      verbs:
      - list
      - watch
    - apiGroups:
      - networking.k8s.io
      resources:
      - networkpolicies
      verbs:
      - list
      - watch

    # cluster-role-binding.yaml
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      labels:
        app.kubernetes.io/name: kube-state-metrics
        app.kubernetes.io/version: v1.9.7
      name: kube-state-metrics
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: kube-state-metrics
    subjects:
    - kind: ServiceAccount
      name: kube-state-metrics
      namespace: kube-system

    # service-account.yaml
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      labels:
        app.kubernetes.io/name: kube-state-metrics
        app.kubernetes.io/version: v1.9.7
      name: kube-state-metrics
      namespace: kube-system

    # deployment.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app.kubernetes.io/name: kube-state-metrics
        app.kubernetes.io/version: v1.9.7
      name: kube-state-metrics
      namespace: kube-system
    spec:
      replicas: 1
      selector:
        matchLabels:
          app.kubernetes.io/name: kube-state-metrics
      template:
        metadata:
          labels:
            app.kubernetes.io/name: kube-state-metrics
            app.kubernetes.io/version: v1.9.7
        spec:
          containers:
          - image: quay.io/coreos/kube-state-metrics:v1.9.7
            livenessProbe:
              httpGet:
                path: /healthz
                port: 8080
              initialDelaySeconds: 5
              timeoutSeconds: 5
            name: kube-state-metrics
            ports:
            - containerPort: 8080
              name: http-metrics
            - containerPort: 8081
              name: telemetry
            readinessProbe:
              httpGet:
                path: /
                port: 8081
              initialDelaySeconds: 5
              timeoutSeconds: 5
          nodeSelector:
            kubernetes.io/os: linux
          serviceAccountName: kube-state-metrics

    # service.yaml
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app.kubernetes.io/name: kube-state-metrics
        app.kubernetes.io/version: v1.9.7
      name: kube-state-metrics
      namespace: kube-system
      annotations:
        prometheus.io/scrape: "true"
    spec:
      ports:
      - name: http-metrics
        port: 8080
        targetPort: http-metrics
      - name: telemetry
        port: 8081
        targetPort: telemetry
      selector:
        app.kubernetes.io/name: kube-state-metrics

    $ kubectl apply -f cluster-role-binding.yaml
    clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics created
    $ kubectl apply -f cluster-role.yaml
    clusterrole.rbac.authorization.k8s.io/kube-state-metrics created
    $ kubectl apply -f service-account.yaml
    serviceaccount/kube-state-metrics created
    $ kubectl apply -f deployment.yaml
    deployment.apps/kube-state-metrics created
    $ kubectl apply -f service.yaml
    service/kube-state-metrics created

Check the kube-state-metrics deployment

    $ kubectl get deployments -n kube-system
    NAME                 READY   UP-TO-DATE   AVAILABLE   AGE
    coredns              2/2     2            2           4d21h
    kube-state-metrics   1/1     1            1           57s

    $ kubectl get service -n kube-system
    NAME                 TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)                  AGE
    kube-dns             ClusterIP   10.96.0.10    <none>        53/UDP,53/TCP,9153/TCP   4d21h
    kube-state-metrics   ClusterIP   10.99.3.147   <none>        8080/TCP,8081/TCP        117s

更新配置

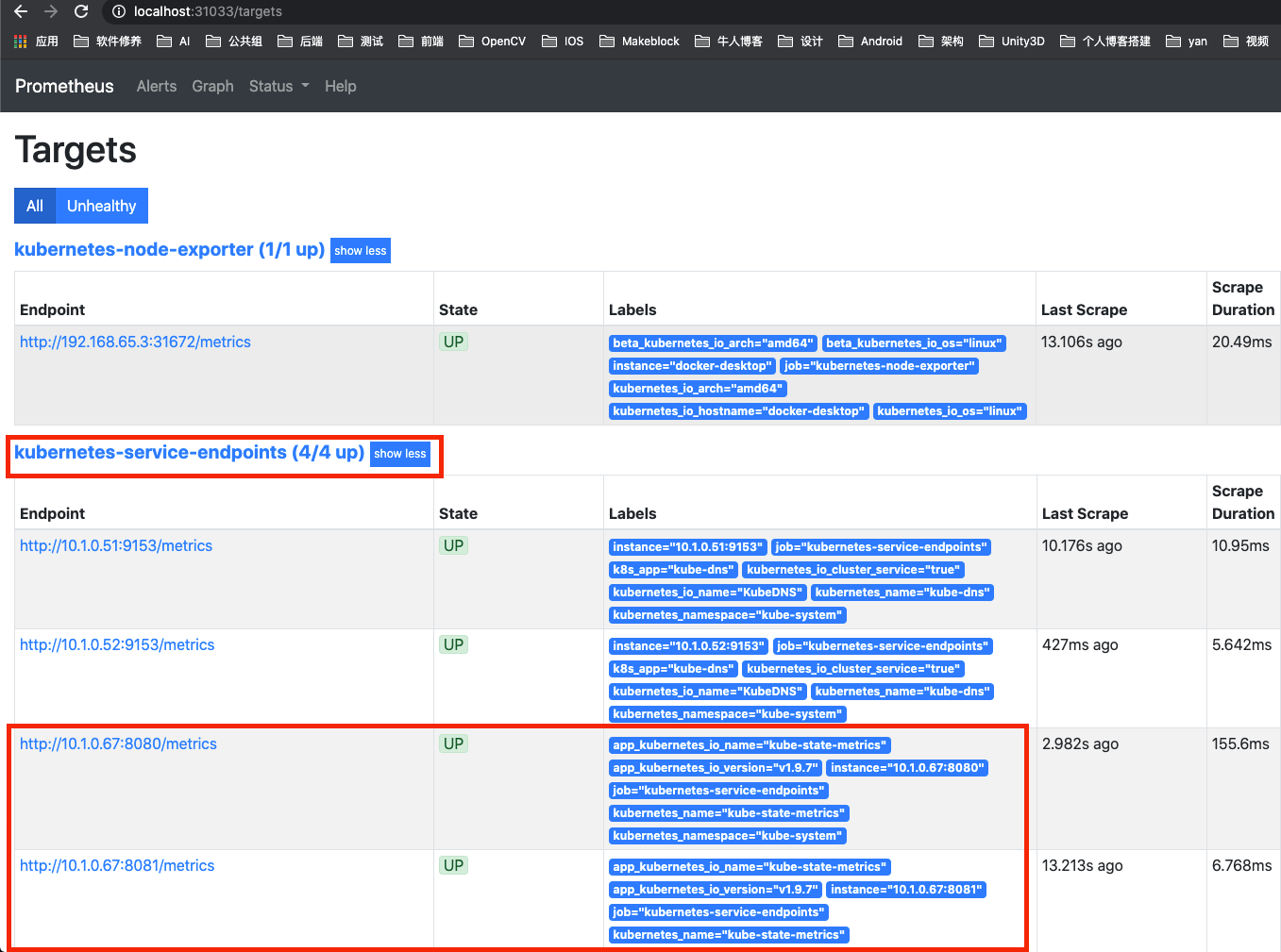

向 Prometheus 配置文件增加 kubernetes-service-endpoints job。

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: prometheus-config
      namespace: observability
    data:
      prometheus.yml: |
        global:
          scrape_interval: 15s
          scrape_timeout: 15s
        rule_files:
        # - "first.rules"
        # - "second.rules"
        scrape_configs:
        - job_name: 'prometheus'
          static_configs:
          - targets: ['localhost:9090']
        - job_name: 'kubernetes-node-exporter'
          scheme: http
          tls_config:
            ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
          bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
          kubernetes_sd_configs:
          - role: node
          relabel_configs:
          - action: labelmap
            regex: __meta_kubernetes_node_label_(.+)
          - source_labels: [__meta_kubernetes_role]
            action: replace
            target_label: kubernetes_role
          - source_labels: [__address__]
            regex: '(.*):10250'
            replacement: '${1}:31672'
            target_label: __address__
        - job_name: kubernetes-service-endpoints
          kubernetes_sd_configs:
          - role: endpoints
          relabel_configs:
          - action: keep
            regex: "true"
            source_labels:
            - __meta_kubernetes_service_annotation_prometheus_io_scrape
          - action: replace
            regex: (https?)
            source_labels:
            - __meta_kubernetes_service_annotation_prometheus_io_scheme
            target_label: __scheme__
          - action: replace
            regex: (.+)
            source_labels:
            - __meta_kubernetes_service_annotation_prometheus_io_path
            target_label: __metrics_path__
          - action: replace
            regex: ([^:]+)(?::\d+)?;(\d+)
            replacement: $1:$2
            source_labels:
            - __address__
            - __meta_kubernetes_service_annotation_prometheus_io_port
            target_label: __address__
          - action: labelmap
            regex: __meta_kubernetes_service_label_(.+)
          - action: replace
            source_labels:
            - __meta_kubernetes_namespace
            target_label: kubernetes_namespace
          - action: replace
            source_labels:
            - __meta_kubernetes_service_name
            target_label: kubernetes_name

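The kubernetes-service-endpoints job does a lot of work in relabel_configs. In particular, the first rule is a keep action that retains only targets whose __meta_kubernetes_service_annotation_prometheus_io_scrape is true. In other words, for a Service in the cluster to be discovered automatically, its annotations must declare prometheus.io/scrape=true.

Because we added the prometheus.io/scrape: "true" annotation in the kube-state-metrics service.yaml, the Service is discovered automatically.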
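
To make the keep rule and the __address__ rule more concrete, here is a small shell approximation. The helper names, the sample Pod address, and the sample port 9102 are all made up for illustration. The original regex uses (?::\d+)? and \d, which POSIX ERE lacks, so it is rewritten with an ordinary group and the port capture becomes \3. Prometheus joins multiple source_labels with ";" before matching.

```shell
#!/bin/sh
# Hypothetical helpers approximating two rules of kubernetes-service-endpoints.

# keep rule: succeed only when the prometheus.io/scrape annotation is exactly "true".
should_keep() {
  printf '%s\n' "$1" | grep -q -x 'true'
}

# __address__ rule: regex ([^:]+)(?::\d+)?;(\d+) with replacement $1:$2.
# $1 = __address__, $2 = prometheus.io/port annotation (may be empty)
apply_port_annotation() {
  joined="$1;$2"
  if printf '%s\n' "$joined" | grep -q -E '^[^:]+(:[0-9]+)?;[0-9]+$'; then
    printf '%s\n' "$joined" | sed -E 's/^([^:]+)(:[0-9]+)?;([0-9]+)$/\1:\3/'
  else
    # No match: the replace action leaves __address__ unchanged.
    printf '%s\n' "$1"
  fi
}

should_keep 'true' && echo "kept"                 # prints kept
apply_port_annotation '10.244.0.7:8080' '9102'    # prints 10.244.0.7:9102
apply_port_annotation '10.244.0.7:8080' ''        # prints 10.244.0.7:8080
```

The service.yaml in this article sets only prometheus.io/scrape, so the port rule does not match and each discovered endpoint address is scraped as-is.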

Update the Prometheus configuration file

    $ kubectl apply -f prometheus.yaml
    configmap/prometheus-config configured

Reload Prometheus so that the configuration takes effect

    curl -X POST localhost:31033/-/reload

验证

查看指标

从上图可以看出,kube-state-metrics 已按配置文件正确配置。

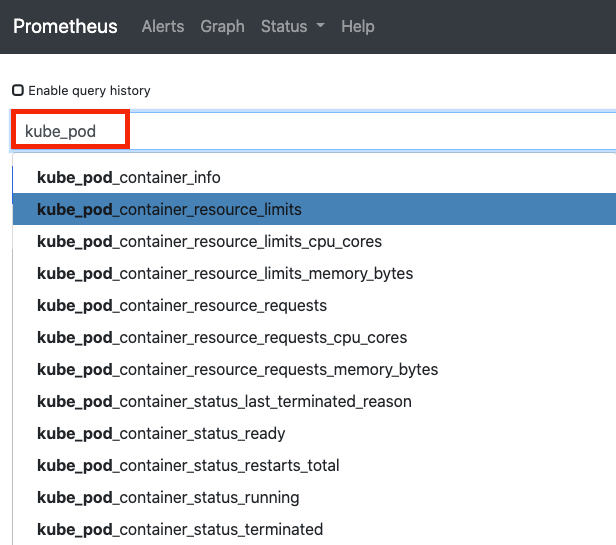

查看 kube_pod 可以看出,已经增加了kube-state-metrics 相关的指标数据。

注:kube-state-metrics 暴露了许多指标,详见 Exposed Metrics。

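Other monitoring

The remaining monitoring targets work on similar principles and are mostly built into Kubernetes, so they are not covered in detail here; the configuration for each is listed below.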

apiservers

    # prometheus.yaml
    - job_name: kubernetes-apiservers
      kubernetes_sd_configs:
      - namespaces:
          names:
          - default
        role: endpoints
      relabel_configs:
      - action: keep
        regex: kubernetes;https
        source_labels:
        - __meta_kubernetes_service_name
        - __meta_kubernetes_endpoint_port_name
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

kubernetes-cadvisor

    # prometheus.yaml
    - job_name: kubernetes-cadvisor
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - replacement: kubernetes.default.svc:443
        target_label: __address__
      - regex: (.+)
        replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
        source_labels:
        - __meta_kubernetes_node_name
        target_label: __metrics_path__
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

kubernetes-nodes

    # prometheus.yaml
    - job_name: kubernetes-nodes
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - replacement: kubernetes.default.svc:443
        target_label: __address__
      - regex: (.+)
        replacement: /api/v1/nodes/${1}/proxy/metrics
        source_labels:
        - __meta_kubernetes_node_name
        target_label: __metrics_path__
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
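
Both the kubernetes-cadvisor and kubernetes-nodes jobs point __address__ at kubernetes.default.svc:443 and rewrite __metrics_path__ from the node name, so the scrape goes through the API server's node proxy rather than to each node directly. The sketch below shows how the path is composed; the helper name `node_proxy_path` and the node name node-1 are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: build the __metrics_path__ produced by the relabel rule
#   regex: (.+)   replacement: /api/v1/nodes/${1}/proxy/metrics[/cadvisor]
# $1 = node name (__meta_kubernetes_node_name)
# $2 = optional suffix such as "cadvisor"
node_proxy_path() {
  if [ -n "$2" ]; then
    printf '/api/v1/nodes/%s/proxy/metrics/%s\n' "$1" "$2"
  else
    printf '/api/v1/nodes/%s/proxy/metrics\n' "$1"
  fi
}

node_proxy_path node-1            # kubernetes-nodes job:    /api/v1/nodes/node-1/proxy/metrics
node_proxy_path node-1 cadvisor   # kubernetes-cadvisor job: /api/v1/nodes/node-1/proxy/metrics/cadvisor
```

Combined with scheme https and the rewritten address, the final scrape URL for a node has the form https://kubernetes.default.svc:443 followed by the path printed above.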

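Summary

This article showed how to monitor a Kubernetes cluster with Prometheus. The next article covers using Exporters to monitor applications running in a Kubernetes cluster.

Note: the yaml files used in this article are available at https://github.com/MakeOptim/service-mesh/prometheus.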
