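Preface

In the previous post, I read the AlexNet paper using the approach from "How to Read Deep Learning Papers". To deepen that understanding, this post walks through implementing the AlexNet CNN with TensorFlow 2 Keras.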

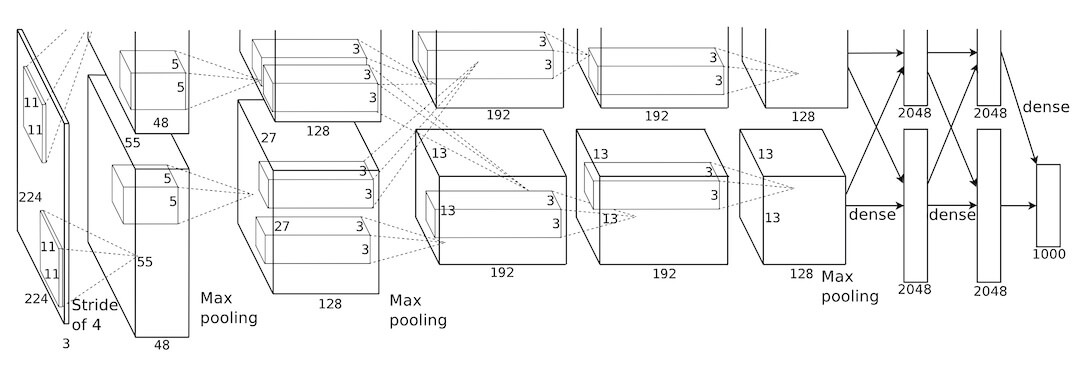

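Network structure

From the previous post, the layer-by-layer structure of AlexNet is as follows:

- The 1st convolutional layer filters the 224 × 224 × 3 input image with 96 kernels of size 11 × 11 × 3 and a stride of 4 pixels, followed by normalization and pooling.
- The 2nd convolutional layer filters with 256 kernels of size 5 × 5 × 48, followed by normalization and pooling.
- The 3rd, 4th, and 5th convolutional layers are connected to one another without any intervening pooling or normalization layers.
- The 3rd convolutional layer has 384 kernels of size 3 × 3 × 256.
- The 4th convolutional layer has 384 kernels of size 3 × 3 × 192.
- The 5th convolutional layer has 256 kernels of size 3 × 3 × 192, followed by pooling.
- Layer 6 flattens its input and feeds it into a fully connected layer with 4096 neurons.
- Layer 7 is a fully connected layer with 4096 neurons.
- Layer 8 is a fully connected layer with 1000 neurons and serves as the output layer.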
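The list above quotes the paper's 224 × 224 input, while the code in this post uses 227 × 227. A minimal sketch of the size arithmetic shows why, assuming the Keras rules for `'valid'` padding (`floor((size - kernel) / stride) + 1`) and `'same'` padding (`ceil(size / stride)`). The helper names `out_size` and `feature_map_sizes` are made up for illustration and are not part of any library.

```python
def out_size(size, kernel, stride, padding):
    """Output length along one spatial axis for a conv or pooling layer."""
    if padding == "same":
        return -(-size // stride)          # ceil(size / stride)
    return (size - kernel) // stride + 1   # 'valid' padding


def feature_map_sizes(input_size):
    """Spatial size after each conv/pool stage of the network in this post."""
    # (kernel, stride, padding) for conv1, pool1, conv2, pool2,
    # conv3, conv4, conv5, pool3 (the hypothetical stage list mirrors the code below).
    stages = [
        (11, 4, "valid"), (3, 2, "valid"),
        (5, 1, "same"), (3, 2, "valid"),
        (3, 1, "same"), (3, 1, "same"), (3, 1, "same"), (3, 2, "valid"),
    ]
    sizes = []
    size = input_size
    for kernel, stride, padding in stages:
        size = out_size(size, kernel, stride, padding)
        sizes.append(size)
    return sizes


print(feature_map_sizes(227))  # [55, 27, 27, 13, 13, 13, 13, 6]
print(feature_map_sizes(224))  # [54, 26, 26, 12, 12, 12, 12, 5]
```

With a 227 × 227 input the final feature map is 6 × 6 × 256 = 9216 values, which matches the Flatten output in the model summary; with 224 × 224 and no padding in the first layer it would be 5 × 5 × 256 = 6400.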

Implementation

Sequential

With the Keras API, the network structure can be "translated" into code quickly.

    from tensorflow import keras
    from tensorflow.keras import layers

    model = keras.models.Sequential([
        # layer 1: 96 kernels of 11 x 11, stride 4, then normalization and pooling
        layers.Conv2D(
            filters=96,
            kernel_size=(11, 11),
            strides=(4, 4),
            activation=keras.activations.relu,
            padding='valid',
            input_shape=(227, 227, 3)),
        layers.BatchNormalization(),
        layers.MaxPool2D(pool_size=(3, 3), strides=(2, 2)),
        # layer 2: 256 kernels of 5 x 5, then normalization and pooling
        layers.Conv2D(
            filters=256,
            kernel_size=(5, 5),
            strides=(1, 1),
            activation=keras.activations.relu,
            padding='same'
        ),
        layers.BatchNormalization(),
        layers.MaxPool2D(pool_size=(3, 3), strides=(2, 2)),
        # layer 3: 384 kernels of 3 x 3
        layers.Conv2D(
            filters=384,
            kernel_size=(3, 3),
            strides=(1, 1),
            activation=keras.activations.relu,
            padding='same'
        ),
        layers.BatchNormalization(),
        # layer 4: 384 kernels of 3 x 3
        layers.Conv2D(
            filters=384,
            kernel_size=(3, 3),
            strides=(1, 1),
            activation=keras.activations.relu,
            padding='same'
        ),
        layers.BatchNormalization(),
        # layer 5: 256 kernels of 3 x 3, then pooling
        layers.Conv2D(
            filters=256,
            kernel_size=(3, 3),
            strides=(1, 1),
            activation=keras.activations.relu,
            padding='same'
        ),
        layers.BatchNormalization(),
        layers.MaxPool2D(pool_size=(3, 3), strides=(2, 2)),
        # layer 6: flatten, then a 4096-unit fully connected layer
        layers.Flatten(),
        layers.Dense(units=4096, activation=keras.activations.relu),
        layers.Dropout(rate=0.5),
        # layer 7: 4096-unit fully connected layer
        layers.Dense(units=4096, activation=keras.activations.relu),
        layers.Dropout(rate=0.5),
        # layer 8: 1000-way softmax output
        layers.Dense(units=1000, activation=keras.activations.softmax)
    ])

    model.summary()

    Model: "sequential"
    _________________________________________________________________
    Layer (type)                 Output Shape              Param #
    =================================================================
    conv2d (Conv2D)              (None, 55, 55, 96)        34944
    _________________________________________________________________
    batch_normalization (BatchNo (None, 55, 55, 96)        384
    _________________________________________________________________
    max_pooling2d (MaxPooling2D) (None, 27, 27, 96)        0
    _________________________________________________________________
    conv2d_1 (Conv2D)            (None, 27, 27, 256)       614656
    _________________________________________________________________
    batch_normalization_1 (Batch (None, 27, 27, 256)       1024
    _________________________________________________________________
    max_pooling2d_1 (MaxPooling2 (None, 13, 13, 256)       0
    _________________________________________________________________
    conv2d_2 (Conv2D)            (None, 13, 13, 384)       885120
    _________________________________________________________________
    batch_normalization_2 (Batch (None, 13, 13, 384)       1536
    _________________________________________________________________
    conv2d_3 (Conv2D)            (None, 13, 13, 384)       1327488
    _________________________________________________________________
    batch_normalization_3 (Batch (None, 13, 13, 384)       1536
    _________________________________________________________________
    conv2d_4 (Conv2D)            (None, 13, 13, 256)       884992
    _________________________________________________________________
    batch_normalization_4 (Batch (None, 13, 13, 256)       1024
    _________________________________________________________________
    max_pooling2d_2 (MaxPooling2 (None, 6, 6, 256)         0
    _________________________________________________________________
    flatten (Flatten)            (None, 9216)              0
    _________________________________________________________________
    dense (Dense)                (None, 4096)              37752832
    _________________________________________________________________
    dropout (Dropout)            (None, 4096)              0
    _________________________________________________________________
    dense_1 (Dense)              (None, 4096)              16781312
    _________________________________________________________________
    dropout_1 (Dropout)          (None, 4096)              0
    _________________________________________________________________
    dense_2 (Dense)              (None, 1000)              4097000
    =================================================================
    Total params: 62,383,848
    Trainable params: 62,381,096
    Non-trainable params: 2,752
    _________________________________________________________________
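The parameter counts in the summary can be checked by hand. The following is a rough sketch in plain Python, assuming the standard counting rules: a convolution has `kernel × kernel × in_channels × filters` weights plus one bias per filter, a dense layer has `in × out` weights plus `out` biases, and Keras `BatchNormalization` keeps four values per channel (gamma and beta are trainable, the moving mean and variance are not). The helper names are invented for illustration. Note that the kernel depths here are the full input channel counts (96, 384) rather than the 48 and 192 quoted from the paper, since this single-device model does not split channels across two GPUs.

```python
def conv2d_params(kernel, in_channels, filters):
    """Weights plus biases of a square-kernel Conv2D layer."""
    return kernel * kernel * in_channels * filters + filters


def batch_norm_params(channels):
    """gamma, beta, moving mean and moving variance: four values per channel."""
    return 4 * channels


def dense_params(in_units, out_units):
    """Weights plus biases of a Dense layer."""
    return in_units * out_units + out_units


# (kernel, in_channels, filters) for the five convolutional layers
conv_specs = [(11, 3, 96), (5, 96, 256), (3, 256, 384), (3, 384, 384), (3, 384, 256)]
# (in_units, out_units) for the three dense layers; 6 * 6 * 256 is the Flatten output
dense_specs = [(6 * 6 * 256, 4096), (4096, 4096), (4096, 1000)]

conv_total = sum(conv2d_params(*spec) for spec in conv_specs)
bn_total = sum(batch_norm_params(filters) for _, _, filters in conv_specs)
dense_total = sum(dense_params(*spec) for spec in dense_specs)

total = conv_total + bn_total + dense_total
non_trainable = bn_total // 2  # only the moving statistics are non-trainable
trainable = total - non_trainable

print(total, trainable, non_trainable)  # 62383848 62381096 2752
```

Roughly 58.6 million of the 62.4 million parameters sit in the three dense layers, with the first one alone accounting for 37.75 million.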

Notes:

- BatchNormalization is used here in place of the Local Response Normalization from the original paper. If you would still rather use Local Response Normalization, you can use layers.Lambda(tf.nn.local_response_normalization). For the differences between the two, see https://towardsdatascience.com/difference-between-local-response-normalization-and-batch-normalization-272308c034ac
- AlexNet uses overlapping pooling with s = 2 and z = 3, so the pooling layer is MaxPool2D(pool_size=(3, 3), strides=(2, 2)).
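For readers who want to see what Local Response Normalization computes, here is a minimal pure-Python sketch of the paper's formula, b_i = a_i / (k + α · Σ a_j²)^β, applied to the channel values at a single pixel. It uses the paper's hyperparameters (k = 2, n = 5, α = 1e-4, β = 0.75) and assumes the window half-width is n // 2; the function name `local_response_norm` is hypothetical. As far as I know, `tf.nn.local_response_normalization` defaults to `depth_radius=5, bias=1, alpha=1, beta=0.5`, so matching the paper would likely mean passing `depth_radius=2, bias=2.0, alpha=1e-4, beta=0.75` explicitly rather than relying on the defaults.

```python
def local_response_norm(a, n=5, k=2.0, alpha=1e-4, beta=0.75):
    """Normalize each channel by the squared activity of its n nearest channels.

    `a` is a list of activations at one spatial position, one value per channel.
    """
    num_channels = len(a)
    half = n // 2  # assumption: integer half-width of the channel window
    out = []
    for i in range(num_channels):
        lo = max(0, i - half)
        hi = min(num_channels - 1, i + half)
        sq_sum = sum(a[j] ** 2 for j in range(lo, hi + 1))
        out.append(a[i] / (k + alpha * sq_sum) ** beta)
    return out


activations = [1.0, 50.0, 2.0, 0.5, 3.0, 0.0]
print(local_response_norm(activations))
```

Channels next to the large activation (50.0) are scaled down slightly more than channels far from it, which is the "lateral inhibition" effect the paper describes.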

Subclassing

Besides Sequential, the model can also be implemented as a subclass of keras.Model; for details, see "Making new Layers and Models via subclassing".

    from tensorflow import keras
    from tensorflow.keras import layers

    class AlexNet(keras.Model):
        def __init__(self, num_classes, input_shape=(227, 227, 3)):
            super().__init__()
            # First convolutional layer; it also declares the input shape.
            self.input_layer = layers.Conv2D(
                filters=96,
                kernel_size=(11, 11),
                strides=(4, 4),
                activation=keras.activations.relu,
                padding='valid',
                input_shape=input_shape)
            # Everything between the first convolution and the output layer.
            self.middle_layers = [
                layers.BatchNormalization(),
                layers.MaxPool2D(pool_size=(3, 3), strides=(2, 2)),
                layers.Conv2D(
                    filters=256,
                    kernel_size=(5, 5),
                    strides=(1, 1),
                    activation=keras.activations.relu,
                    padding='same'
                ),
                layers.BatchNormalization(),
                layers.MaxPool2D(pool_size=(3, 3), strides=(2, 2)),
                layers.Conv2D(
                    filters=384,
                    kernel_size=(3, 3),
                    strides=(1, 1),
                    activation=keras.activations.relu,
                    padding='same'
                ),
                layers.BatchNormalization(),
                layers.Conv2D(
                    filters=384,
                    kernel_size=(3, 3),
                    strides=(1, 1),
                    activation=keras.activations.relu,
                    padding='same'
                ),
                layers.BatchNormalization(),
                layers.Conv2D(
                    filters=256,
                    kernel_size=(3, 3),
                    strides=(1, 1),
                    activation=keras.activations.relu,
                    padding='same'
                ),
                layers.BatchNormalization(),
                layers.MaxPool2D(pool_size=(3, 3), strides=(2, 2)),
                layers.Flatten(),
                layers.Dense(units=4096, activation=keras.activations.relu),
                layers.Dropout(rate=0.5),
                layers.Dense(units=4096, activation=keras.activations.relu),
                layers.Dropout(rate=0.5),
            ]
            # Softmax classifier over num_classes categories.
            self.out_layer = layers.Dense(
                units=num_classes, activation=keras.activations.softmax)

        def call(self, inputs):
            x = self.input_layer(inputs)
            for layer in self.middle_layers:
                x = layer(x)
            probs = self.out_layer(x)
            return probs

    model = AlexNet(1000)
    # A subclassed model must be built before summary() can be called.
    model.build((None, 227, 227, 3))
    model.summary()

    Model: "alex_net"
    _________________________________________________________________
    Layer (type)                 Output Shape              Param #
    =================================================================
    conv2d_5 (Conv2D)            multiple                  34944
    _________________________________________________________________
    batch_normalization_5 (Batch multiple                  384
    _________________________________________________________________
    max_pooling2d_3 (MaxPooling2 multiple                  0
    _________________________________________________________________
    conv2d_6 (Conv2D)            multiple                  614656
    _________________________________________________________________
    batch_normalization_6 (Batch multiple                  1024
    _________________________________________________________________
    max_pooling2d_4 (MaxPooling2 multiple                  0
    _________________________________________________________________
    conv2d_7 (Conv2D)            multiple                  885120
    _________________________________________________________________
    batch_normalization_7 (Batch multiple                  1536
    _________________________________________________________________
    conv2d_8 (Conv2D)            multiple                  1327488
    _________________________________________________________________
    batch_normalization_8 (Batch multiple                  1536
    _________________________________________________________________
    conv2d_9 (Conv2D)            multiple                  884992
    _________________________________________________________________
    batch_normalization_9 (Batch multiple                  1024
    _________________________________________________________________
    max_pooling2d_5 (MaxPooling2 multiple                  0
    _________________________________________________________________
    flatten_1 (Flatten)          multiple                  0
    _________________________________________________________________
    dense_3 (Dense)              multiple                  37752832
    _________________________________________________________________
    dropout_2 (Dropout)          multiple                  0
    _________________________________________________________________
    dense_4 (Dense)              multiple                  16781312
    _________________________________________________________________
    dropout_3 (Dropout)          multiple                  0
    _________________________________________________________________
    dense_5 (Dense)              multiple                  4097000
    =================================================================
    Total params: 62,383,848
    Trainable params: 62,381,096
    Non-trainable params: 2,752
    _________________________________________________________________

Note: a custom Model must be built before summary() is called; otherwise the following error is raised:

    ValueError: This model has not yet been built. Build the model first by calling `build()` or calling `fit()` with some data, or specify an `input_shape` argument in the first layer(s) for automatic build.

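Demo

The AlexNet paper covers not only the network structure but also details such as the dataset and data augmentation. To help readers understand AlexNet better, I took a TensorFlow example and swapped in the custom AlexNet model for training; see https://github.com/CatchZeng/YiAI-examples/blob/master/papers/AlexNet/AlexNet.ipynb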

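Summary

Real understanding comes from practice, and moving from reading a paper to implementing it is a step up. Implementing the model exposes details that reading alone does not, and it builds familiarity with the TensorFlow ecosystem. I strongly recommend reading more papers and implementing them.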
