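Note: updated on 2021-01-04

Existing Problems

In the EFK Log Collection article, we explained how to collect Kubernetes cluster logs with EFK. However, the following problems remain:

- Elasticsearch is deployed as a single node, which does not meet production requirements
- The Fluentd version is outdated
- Logs are not cleaned up automatically, so the disk can easily fill up

This article explains how to deploy a highly available EFK log collection stack.

ECK

ECK (Elastic Cloud on Kubernetes) can be used to eliminate the Elasticsearch single point of failure.

Install the Operator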

❯ kubectl apply -f https://download.elastic.co/downloads/eck/1.3.0/all-in-one.yaml
namespace/elastic-system created
serviceaccount/elastic-operator created
secret/elastic-webhook-server-cert created
configmap/elastic-operator created
customresourcedefinition.apiextensions.k8s.io/apmservers.apm.k8s.elastic.co created
customresourcedefinition.apiextensions.k8s.io/beats.beat.k8s.elastic.co created
customresourcedefinition.apiextensions.k8s.io/elasticsearches.elasticsearch.k8s.elastic.co created
customresourcedefinition.apiextensions.k8s.io/enterprisesearches.enterprisesearch.k8s.elastic.co created
customresourcedefinition.apiextensions.k8s.io/kibanas.kibana.k8s.elastic.co created
clusterrole.rbac.authorization.k8s.io/elastic-operator created
clusterrole.rbac.authorization.k8s.io/elastic-operator-view created
clusterrole.rbac.authorization.k8s.io/elastic-operator-edit created
clusterrolebinding.rbac.authorization.k8s.io/elastic-operator created
service/elastic-webhook-server created
statefulset.apps/elastic-operator created
validatingwebhookconfiguration.admissionregistration.k8s.io/elastic-webhook.k8s.elastic.co created

After a successful installation, an elastic-system namespace and an operator Pod are created automatically:

❯ kubectl get all -n elastic-system
NAME                     READY   STATUS    RESTARTS   AGE
pod/elastic-operator-0   1/1     Running   0          53s

NAME                             TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)   AGE
service/elastic-webhook-server   ClusterIP   10.0.73.219   <none>        443/TCP   55s

NAME                                READY   AGE
statefulset.apps/elastic-operator   1/1     57s

Deploy ECK

# elastic.yaml
apiVersion: elasticsearch.k8s.elastic.co/v1
kind: Elasticsearch
metadata:
  name: elastic
  namespace: elastic-system
spec:
  version: 7.10.0
  nodeSets:
    - name: default
      count: 3
      volumeClaimTemplates:
        - metadata:
            name: elasticsearch-data
          spec:
            accessModes:
              - ReadWriteOnce
            resources:
              requests:
                storage: 20Gi
            storageClassName: infrastructure-premium-retain
      podTemplate:
        spec:
          # https://www.elastic.co/guide/en/cloud-on-k8s/current/k8s-virtual-memory.html
          initContainers:
            - name: sysctl
              securityContext:
                privileged: true
              command: ["sh", "-c", "sysctl -w vm.max_map_count=262144"]
---
apiVersion: kibana.k8s.elastic.co/v1
kind: Kibana
metadata:
  name: kibana
  namespace: elastic-system
spec:
  version: 7.10.0
  count: 1
  elasticsearchRef:
    name: elastic

The elastic.yaml above does the following:

- Deploys a 3-node Elasticsearch cluster that uses the infrastructure-premium-retain storageClass, with 20Gi of storage per node
- Deploys a single-node Kibana

Note: for detailed parameter settings, see https://www.elastic.co/guide/en/cloud-on-k8s/current/k8s-elasticsearch-specification.html. Adjust them to suit your own cluster.
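To see why three nodes is a sensible minimum, it helps to work through the majority arithmetic behind master election. The sketch below is only an illustration: the function names are made up for this example, and it assumes that all nodes in the default nodeSet are master-eligible, which is what you get when no node roles are set explicitly.

```shell
#!/bin/sh
# Illustrative helpers (hypothetical names, not part of ECK or Elasticsearch).

# Majority quorum for n master-eligible nodes: floor(n / 2) + 1.
quorum() {
  echo $(( $1 / 2 + 1 ))
}

# How many master-eligible nodes can be lost while a majority remains.
tolerated_failures() {
  echo $(( $1 - $(quorum "$1") ))
}

for n in 1 2 3 5; do
  echo "nodes=$n quorum=$(quorum "$n") tolerated_failures=$(tolerated_failures "$n")"
done
```

Under this simple majority rule, one or two nodes cannot lose any member, while three nodes is the smallest count that still has a majority after one node is lost.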

Run the following command to deploy:

❯ kubectl apply -f elastic.yaml
elasticsearch.elasticsearch.k8s.elastic.co/elastic created

Once deployed, you can see that Elasticsearch and Kibana are running in the elastic-system namespace:

❯ kubectl get all -n elastic-system
NAME                             READY   STATUS    RESTARTS   AGE
pod/elastic-es-default-0         1/1     Running   0          10d
pod/elastic-es-default-1         1/1     Running   0          10d
pod/elastic-es-default-2         1/1     Running   0          10d
pod/elastic-operator-0           1/1     Running   1          10d
pod/kibana-kb-5bcd9f45dc-hzc9s   1/1     Running   0          10d

NAME                             TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)    AGE
service/elastic-es-default       ClusterIP   None           <none>        9200/TCP   10d
service/elastic-es-http          ClusterIP   172.23.4.246   <none>        9200/TCP   10d
service/elastic-es-transport     ClusterIP   None           <none>        9300/TCP   10d
service/elastic-webhook-server   ClusterIP   172.23.8.16    <none>        443/TCP    10d
service/kibana-kb-http           ClusterIP   172.23.7.101   <none>        5601/TCP   10d

NAME                        READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/kibana-kb   1/1     1            1           10d

NAME                                   DESIRED   CURRENT   READY   AGE
replicaset.apps/kibana-kb-5bcd9f45dc   1         1         1       10d

NAME                                  READY   AGE
statefulset.apps/elastic-es-default   3/3     10d
statefulset.apps/elastic-operator     1/1     10d

Access Kibana

Get the secret

❯ kubectl get secret elastic-es-elastic-user -n elastic-system -o=jsonpath='{.data.elastic}' | base64 --decode; echo
895mwewR9atxxxxAKgE4uLh2

port-forward

❯ open https://localhost:5601 && kubectl port-forward service/kibana-kb-http -n elastic-system 5601

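Log in with the username elastic and the secret obtained above.

Fluentd

Fluentd is deployed using the latest official image (v1.11.5 at the time of writing).

The Fluent project maintains the fluentd-kubernetes-daemonset repository on GitHub, which we can use as a reference.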

# fluentd-es-ds.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: fluentd-es
  namespace: elastic-system
  labels:
    app: fluentd-es
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: fluentd-es
  labels:
    app: fluentd-es
rules:
  - apiGroups:
      - ""
    resources:
      - "namespaces"
      - "pods"
    verbs:
      - "get"
      - "watch"
      - "list"
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: fluentd-es
  labels:
    app: fluentd-es
subjects:
  - kind: ServiceAccount
    name: fluentd-es
    namespace: elastic-system
    apiGroup: ""
roleRef:
  kind: ClusterRole
  name: fluentd-es
  apiGroup: ""
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd-es
  namespace: elastic-system
  labels:
    app: fluentd-es
spec:
  selector:
    matchLabels:
      app: fluentd-es
  template:
    metadata:
      labels:
        app: fluentd-es
    spec:
      serviceAccount: fluentd-es
      serviceAccountName: fluentd-es
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      containers:
        - name: fluentd-es
          image: fluent/fluentd-kubernetes-daemonset:v1.11.5-debian-elasticsearch7-1.1
          env:
            - name: FLUENT_ELASTICSEARCH_HOST
              value: elastic-es-http
            # default user
            - name: FLUENT_ELASTICSEARCH_USER
              value: elastic
            # is already present from the elasticsearch deployment
            - name: FLUENT_ELASTICSEARCH_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: elastic-es-elastic-user
                  key: elastic
            # elasticsearch standard port
            - name: FLUENT_ELASTICSEARCH_PORT
              value: "9200"
            # the elastic operator uses https by default
            - name: FLUENT_ELASTICSEARCH_SCHEME
              value: "https"
            # don't need systemd logs for now
            - name: FLUENTD_SYSTEMD_CONF
              value: disable
            # the certs are self-signed, so verification must be disabled
            - name: FLUENT_ELASTICSEARCH_SSL_VERIFY
              value: "false"
            # to avoid issue https://github.com/uken/fluent-plugin-elasticsearch/issues/525
            - name: FLUENT_ELASTICSEARCH_RELOAD_CONNECTIONS
              value: "false"
          resources:
            limits:
              memory: 512Mi
            requests:
              cpu: 100m
              memory: 100Mi
          volumeMounts:
            - name: varlog
              mountPath: /var/log
            - name: varlibdockercontainers
              mountPath: /var/lib/docker/containers
              readOnly: true
            - name: config-volume
              mountPath: /fluentd/etc
      terminationGracePeriodSeconds: 30
      volumes:
        - name: varlog
          hostPath:
            path: /var/log
        - name: varlibdockercontainers
          hostPath:
            path: /var/lib/docker/containers
        - name: config-volume
          configMap:
            name: fluentd-es-config

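Differences from the previous deployment:

- The image is replaced with the one maintained by fluent/fluentd-kubernetes-daemonset
- Elasticsearch-related environment variables such as FLUENT_ELASTICSEARCH_HOST and FLUENT_ELASTICSEARCH_USER are added. Their values correspond to the Elasticsearch deployed by ECK, and they are referenced by the fluentd ConfigMap below
- The configuration file path is changed to /fluentd/etc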

# fluentd-es-configmap.yaml
kind: ConfigMap
apiVersion: v1
metadata:
  name: fluentd-es-config
  namespace: elastic-system
data:
  fluent.conf: |-
    # https://github.com/fluent/fluentd-kubernetes-daemonset/blob/master/docker-image/v1.11/debian-elasticsearch7/conf/fluent.conf
    @include "#{ENV['FLUENTD_SYSTEMD_CONF'] || 'systemd'}.conf"
    @include "#{ENV['FLUENTD_PROMETHEUS_CONF'] || 'prometheus'}.conf"
    @include kubernetes.conf
    @include conf.d/*.conf

    <match kubernetes.**>
      # https://github.com/kubernetes/kubernetes/issues/23001
      @type elasticsearch_dynamic
      @id kubernetes_elasticsearch
      @log_level info
      include_tag_key true
      host "#{ENV['FLUENT_ELASTICSEARCH_HOST']}"
      port "#{ENV['FLUENT_ELASTICSEARCH_PORT']}"
      path "#{ENV['FLUENT_ELASTICSEARCH_PATH']}"
      scheme "#{ENV['FLUENT_ELASTICSEARCH_SCHEME'] || 'http'}"
      ssl_verify "#{ENV['FLUENT_ELASTICSEARCH_SSL_VERIFY'] || 'true'}"
      ssl_version "#{ENV['FLUENT_ELASTICSEARCH_SSL_VERSION'] || 'TLSv1_2'}"
      user "#{ENV['FLUENT_ELASTICSEARCH_USER'] || use_default}"
      password "#{ENV['FLUENT_ELASTICSEARCH_PASSWORD'] || use_default}"
      reload_connections "#{ENV['FLUENT_ELASTICSEARCH_RELOAD_CONNECTIONS'] || 'false'}"
      reconnect_on_error "#{ENV['FLUENT_ELASTICSEARCH_RECONNECT_ON_ERROR'] || 'true'}"
      reload_on_failure "#{ENV['FLUENT_ELASTICSEARCH_RELOAD_ON_FAILURE'] || 'true'}"
      log_es_400_reason "#{ENV['FLUENT_ELASTICSEARCH_LOG_ES_400_REASON'] || 'false'}"
      logstash_prefix logstash-${record['kubernetes']['namespace_name']}
      logstash_dateformat "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_DATEFORMAT'] || '%Y.%m.%d'}"
      logstash_format "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_FORMAT'] || 'true'}"
      index_name "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_INDEX_NAME'] || 'logstash'}"
      target_index_key "#{ENV['FLUENT_ELASTICSEARCH_TARGET_INDEX_KEY'] || use_nil}"
      type_name "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_TYPE_NAME'] || 'fluentd'}"
      include_timestamp "#{ENV['FLUENT_ELASTICSEARCH_INCLUDE_TIMESTAMP'] || 'false'}"
      template_name "#{ENV['FLUENT_ELASTICSEARCH_TEMPLATE_NAME'] || use_nil}"
      template_file "#{ENV['FLUENT_ELASTICSEARCH_TEMPLATE_FILE'] || use_nil}"
      template_overwrite "#{ENV['FLUENT_ELASTICSEARCH_TEMPLATE_OVERWRITE'] || use_default}"
      sniffer_class_name "#{ENV['FLUENT_SNIFFER_CLASS_NAME'] || 'Fluent::Plugin::ElasticsearchSimpleSniffer'}"
      request_timeout "#{ENV['FLUENT_ELASTICSEARCH_REQUEST_TIMEOUT'] || '5s'}"
      suppress_type_name "#{ENV['FLUENT_ELASTICSEARCH_SUPPRESS_TYPE_NAME'] || 'true'}"
      enable_ilm "#{ENV['FLUENT_ELASTICSEARCH_ENABLE_ILM'] || 'false'}"
      ilm_policy_id "#{ENV['FLUENT_ELASTICSEARCH_ILM_POLICY_ID'] || use_default}"
      ilm_policy "#{ENV['FLUENT_ELASTICSEARCH_ILM_POLICY'] || use_default}"
      ilm_policy_overwrite "#{ENV['FLUENT_ELASTICSEARCH_ILM_POLICY_OVERWRITE'] || 'false'}"
      <buffer>
        flush_thread_count "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_FLUSH_THREAD_COUNT'] || '8'}"
        flush_interval "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_FLUSH_INTERVAL'] || '5s'}"
        chunk_limit_size "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_CHUNK_LIMIT_SIZE'] || '2M'}"
        queue_limit_length "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_QUEUE_LIMIT_LENGTH'] || '32'}"
        retry_max_interval "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_RETRY_MAX_INTERVAL'] || '30'}"
        retry_forever true
      </buffer>
    </match>

    <match **>
      @type elasticsearch
      @id out_es
      @log_level info
      include_tag_key true
      host "#{ENV['FLUENT_ELASTICSEARCH_HOST']}"
      port "#{ENV['FLUENT_ELASTICSEARCH_PORT']}"
      path "#{ENV['FLUENT_ELASTICSEARCH_PATH']}"
      scheme "#{ENV['FLUENT_ELASTICSEARCH_SCHEME'] || 'http'}"
      ssl_verify "#{ENV['FLUENT_ELASTICSEARCH_SSL_VERIFY'] || 'true'}"
      ssl_version "#{ENV['FLUENT_ELASTICSEARCH_SSL_VERSION'] || 'TLSv1_2'}"
      user "#{ENV['FLUENT_ELASTICSEARCH_USER'] || use_default}"
      password "#{ENV['FLUENT_ELASTICSEARCH_PASSWORD'] || use_default}"
      reload_connections "#{ENV['FLUENT_ELASTICSEARCH_RELOAD_CONNECTIONS'] || 'false'}"
      reconnect_on_error "#{ENV['FLUENT_ELASTICSEARCH_RECONNECT_ON_ERROR'] || 'true'}"
      reload_on_failure "#{ENV['FLUENT_ELASTICSEARCH_RELOAD_ON_FAILURE'] || 'true'}"
      log_es_400_reason "#{ENV['FLUENT_ELASTICSEARCH_LOG_ES_400_REASON'] || 'false'}"
      logstash_prefix "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_PREFIX'] || 'logstash'}"
      logstash_dateformat "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_DATEFORMAT'] || '%Y.%m.%d'}"
      logstash_format "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_FORMAT'] || 'true'}"
      index_name "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_INDEX_NAME'] || 'logstash'}"
      target_index_key "#{ENV['FLUENT_ELASTICSEARCH_TARGET_INDEX_KEY'] || use_nil}"
      type_name "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_TYPE_NAME'] || 'fluentd'}"
      include_timestamp "#{ENV['FLUENT_ELASTICSEARCH_INCLUDE_TIMESTAMP'] || 'false'}"
      template_name "#{ENV['FLUENT_ELASTICSEARCH_TEMPLATE_NAME'] || use_nil}"
      template_file "#{ENV['FLUENT_ELASTICSEARCH_TEMPLATE_FILE'] || use_nil}"
      template_overwrite "#{ENV['FLUENT_ELASTICSEARCH_TEMPLATE_OVERWRITE'] || use_default}"
      sniffer_class_name "#{ENV['FLUENT_SNIFFER_CLASS_NAME'] || 'Fluent::Plugin::ElasticsearchSimpleSniffer'}"
      request_timeout "#{ENV['FLUENT_ELASTICSEARCH_REQUEST_TIMEOUT'] || '5s'}"
      suppress_type_name "#{ENV['FLUENT_ELASTICSEARCH_SUPPRESS_TYPE_NAME'] || 'true'}"
      enable_ilm "#{ENV['FLUENT_ELASTICSEARCH_ENABLE_ILM'] || 'false'}"
      ilm_policy_id "#{ENV['FLUENT_ELASTICSEARCH_ILM_POLICY_ID'] || use_default}"
      ilm_policy "#{ENV['FLUENT_ELASTICSEARCH_ILM_POLICY'] || use_default}"
      ilm_policy_overwrite "#{ENV['FLUENT_ELASTICSEARCH_ILM_POLICY_OVERWRITE'] || 'false'}"
      <buffer>
        flush_thread_count "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_FLUSH_THREAD_COUNT'] || '8'}"
        flush_interval "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_FLUSH_INTERVAL'] || '5s'}"
        chunk_limit_size "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_CHUNK_LIMIT_SIZE'] || '2M'}"
        queue_limit_length "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_QUEUE_LIMIT_LENGTH'] || '32'}"
        retry_max_interval "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_RETRY_MAX_INTERVAL'] || '30'}"
        retry_forever true
      </buffer>
    </match>
  kubernetes.conf: |-
    # https://github.com/fluent/fluentd-kubernetes-daemonset/blob/master/docker-image/v1.11/debian-elasticsearch7/conf/kubernetes.conf
    <label @FLUENT_LOG>
      <match fluent.**>
        @type null
        @id ignore_fluent_logs
      </match>
    </label>

    <source>
      @id fluentd-containers.log
      @type tail
      path /var/log/containers/*.log
      pos_file /var/log/es-containers.log.pos
      tag raw.kubernetes.*
      read_from_head true
      <parse>
        @type multi_format
        <pattern>
          format json
          time_key time
          time_format %Y-%m-%dT%H:%M:%S.%NZ
        </pattern>
        <pattern>
          format /^(?<time>.+) (?<stream>stdout|stderr) [^ ]* (?<log>.*)$/
          time_format %Y-%m-%dT%H:%M:%S.%N%:z
        </pattern>
      </parse>
    </source>

    # Detect exceptions in the log output and forward them as one log entry.
    <match raw.kubernetes.**>
      @id raw.kubernetes
      @type detect_exceptions
      remove_tag_prefix raw
      message log
      stream stream
      multiline_flush_interval 5
      max_bytes 500000
      max_lines 1000
    </match>

    # Concatenate multi-line logs
    <filter **>
      @id filter_concat
      @type concat
      key message
      multiline_end_regexp /\n$/
      separator ""
    </filter>

    # Enriches records with Kubernetes metadata
    <filter kubernetes.**>
      @id filter_kubernetes_metadata
      @type kubernetes_metadata
    </filter>

    # Fixes json fields in Elasticsearch
    <filter kubernetes.**>
      @id filter_parser
      @type parser
      key_name log
      reserve_data true
      remove_key_name_field true
      <parse>
        @type multi_format
        <pattern>
          format json
        </pattern>
        <pattern>
          format none
        </pattern>
      </parse>
    </filter>

    <source>
      @type tail
      @id in_tail_minion
      path /var/log/salt/minion
      pos_file /var/log/fluentd-salt.pos
      tag salt
      <parse>
        @type regexp
        expression /^(?<time>[^ ]* [^ ,]*)[^\[]*\[[^\]]*\]\[(?<severity>[^ \]]*) *\] (?<message>.*)$/
        time_format %Y-%m-%d %H:%M:%S
      </parse>
    </source>

    <source>
      @type tail
      @id in_tail_startupscript
      path /var/log/startupscript.log
      pos_file /var/log/fluentd-startupscript.log.pos
      tag startupscript
      <parse>
        @type syslog
      </parse>
    </source>

    <source>
      @type tail
      @id in_tail_docker
      path /var/log/docker.log
      pos_file /var/log/fluentd-docker.log.pos
      tag docker
      <parse>
        @type regexp
        expression /^time="(?<time>[^)]*)" level=(?<severity>[^ ]*) msg="(?<message>[^"]*)"( err="(?<error>[^"]*)")?( statusCode=(?<status_code>\d+))?/
      </parse>
    </source>

    <source>
      @type tail
      @id in_tail_etcd
      path /var/log/etcd.log
      pos_file /var/log/fluentd-etcd.log.pos
      tag etcd
      <parse>
        @type none
      </parse>
    </source>

    <source>
      @type tail
      @id in_tail_kubelet
      multiline_flush_interval 5s
      path /var/log/kubelet.log
      pos_file /var/log/fluentd-kubelet.log.pos
      tag kubelet
      <parse>
        @type kubernetes
      </parse>
    </source>

    <source>
      @type tail
      @id in_tail_kube_proxy
      multiline_flush_interval 5s
      path /var/log/kube-proxy.log
      pos_file /var/log/fluentd-kube-proxy.log.pos
      tag kube-proxy
      <parse>
        @type kubernetes
      </parse>
    </source>

    <source>
      @type tail
      @id in_tail_kube_apiserver
      multiline_flush_interval 5s
      path /var/log/kube-apiserver.log
      pos_file /var/log/fluentd-kube-apiserver.log.pos
      tag kube-apiserver
      <parse>
        @type kubernetes
      </parse>
    </source>

    <source>
      @type tail
      @id in_tail_kube_controller_manager
      multiline_flush_interval 5s
      path /var/log/kube-controller-manager.log
      pos_file /var/log/fluentd-kube-controller-manager.log.pos
      tag kube-controller-manager
      <parse>
        @type kubernetes
      </parse>
    </source>

    <source>
      @type tail
      @id in_tail_kube_scheduler
      multiline_flush_interval 5s
      path /var/log/kube-scheduler.log
      pos_file /var/log/fluentd-kube-scheduler.log.pos
      tag kube-scheduler
      <parse>
        @type kubernetes
      </parse>
    </source>

    <source>
      @type tail
      @id in_tail_rescheduler
      multiline_flush_interval 5s
      path /var/log/rescheduler.log
      pos_file /var/log/fluentd-rescheduler.log.pos
      tag rescheduler
      <parse>
        @type kubernetes
      </parse>
    </source>

    <source>
      @type tail
      @id in_tail_glbc
      multiline_flush_interval 5s
      path /var/log/glbc.log
      pos_file /var/log/fluentd-glbc.log.pos
      tag glbc
      <parse>
        @type kubernetes
      </parse>
    </source>

    <source>
      @type tail
      @id in_tail_cluster_autoscaler
      multiline_flush_interval 5s
      path /var/log/cluster-autoscaler.log
      pos_file /var/log/fluentd-cluster-autoscaler.log.pos
      tag cluster-autoscaler
      <parse>
        @type kubernetes
      </parse>
    </source>

    # Example:
    # 2017-02-09T00:15:57.992775796Z AUDIT: id="90c73c7c-97d6-4b65-9461-f94606ff825f" ip="104.132.1.72" method="GET" user="kubecfg" as="<self>" asgroups="<lookup>" namespace="default" uri="/api/v1/namespaces/default/pods"
    # 2017-02-09T00:15:57.993528822Z AUDIT: id="90c73c7c-97d6-4b65-9461-f94606ff825f" response="200"
    <source>
      @type tail
      @id in_tail_kube_apiserver_audit
      multiline_flush_interval 5s
      path /var/log/kubernetes/kube-apiserver-audit.log
      pos_file /var/log/kube-apiserver-audit.log.pos
      tag kube-apiserver-audit
      <parse>
        @type multiline
        format_firstline /^\S+\s+AUDIT:/
        # Fields must be explicitly captured by name to be parsed into the record.
        # Fields may not always be present, and order may change, so this just looks
        # for a list of key="\"quoted\" value" pairs separated by spaces.
        # Unknown fields are ignored.
        # Note: We can't separate query/response lines as format1/format2 because
        # they don't always come one after the other for a given query.
        format1 /^(?<time>\S+) AUDIT:(?: (?:id="(?<id>(?:[^"\\]|\\.)*)"|ip="(?<ip>(?:[^"\\]|\\.)*)"|method="(?<method>(?:[^"\\]|\\.)*)"|user="(?<user>(?:[^"\\]|\\.)*)"|groups="(?<groups>(?:[^"\\]|\\.)*)"|as="(?<as>(?:[^"\\]|\\.)*)"|asgroups="(?<asgroups>(?:[^"\\]|\\.)*)"|namespace="(?<namespace>(?:[^"\\]|\\.)*)"|uri="(?<uri>(?:[^"\\]|\\.)*)"|response="(?<response>(?:[^"\\]|\\.)*)"|\w+="(?:[^"\\]|\\.)*"))*/
        time_format %Y-%m-%dT%T.%L%Z
      </parse>
    </source>

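Differences from the previous deployment:

- The configuration file is renamed to fluent.conf. For detailed parameter settings, see https://github.com/fluent/fluentd-kubernetes-daemonset/blob/master/docker-image/v1.11/debian-elasticsearch7/conf/fluent.conf and https://docs.fluentd.org/configuration
- The output configuration is moved into fluent.conf. In <match kubernetes.**>, environment variables point the output at the Elasticsearch deployed by ECK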
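One detail of the <match kubernetes.**> block worth spelling out is how indices end up being named. logstash_prefix is set to logstash-${record['kubernetes']['namespace_name']}, and with logstash_format enabled the plugin appends a separator (a hyphen by default) and the date formatted with logstash_dateformat, so each namespace gets its own daily index. The shell sketch below mimics that naming. index_name is a hypothetical helper written for this article, not part of Fluentd, and it assumes GNU date and UTC dates.

```shell
#!/bin/sh
# Hypothetical helper: reproduce the daily index name that the
# <match kubernetes.**> block would produce for a namespace.
# $1 = namespace name, $2 = Unix timestamp of the log record.
index_name() {
  namespace=$1
  epoch=$2
  # %Y.%m.%d mirrors the default logstash_dateformat in fluent.conf.
  printf 'logstash-%s-%s\n' "$namespace" "$(date -u -d "@$epoch" +'%Y.%m.%d')"
}

# 1609718400 is 2021-01-04 00:00:00 UTC.
index_name default 1609718400
index_name kube-system 1609718400
```

Because every such index starts with logstash- and ends with the date, a wildcard pattern such as logstash-*2021.01.04 covers all namespaces for a given day.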

Deploy Fluentd

❯ kubectl apply -f fluentd-es-configmap.yaml
❯ kubectl apply -f fluentd-es-ds.yaml

Once deployed, you can see that fluentd is running in the elastic-system namespace:

❯ kubectl get all -n elastic-system
NAME                             READY   STATUS    RESTARTS   AGE
pod/elastic-es-default-0         1/1     Running   0          10d
pod/elastic-es-default-1         1/1     Running   0          10d
pod/elastic-es-default-2         1/1     Running   0          10d
pod/elastic-operator-0           1/1     Running   1          10d
pod/fluentd-es-lrmqt             1/1     Running   0          4d6h
pod/fluentd-es-rd6xz             1/1     Running   0          4d6h
pod/fluentd-es-spq54             1/1     Running   0          4d6h
pod/fluentd-es-xc6pv             1/1     Running   0          4d6h
pod/kibana-kb-5bcd9f45dc-hzc9s   1/1     Running   0          10d

NAME                             TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)    AGE
service/elastic-es-default       ClusterIP   None           <none>        9200/TCP   10d
service/elastic-es-http          ClusterIP   172.23.4.246   <none>        9200/TCP   10d
service/elastic-es-transport     ClusterIP   None           <none>        9300/TCP   10d
service/elastic-webhook-server   ClusterIP   172.23.8.16    <none>        443/TCP    10d
service/kibana-kb-http           ClusterIP   172.23.7.101   <none>        5601/TCP   10d

NAME                        DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR   AGE
daemonset.apps/fluentd-es   4         4         4       4            4           <none>          4d6h

NAME                        READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/kibana-kb   1/1     1            1           10d

NAME                                   DESIRED   CURRENT   READY   AGE
replicaset.apps/kibana-kb-5bcd9f45dc   1         1         1       10d

NAME                                  READY   AGE
statefulset.apps/elastic-es-default   3/3     10d
statefulset.apps/elastic-operator     1/1     10d

ILM

得益于 ECK 部署,新版本的 Elasticsearch 已经支持 ILM。

我们可以利用 ILM 轻松配置自动删除日志策略。

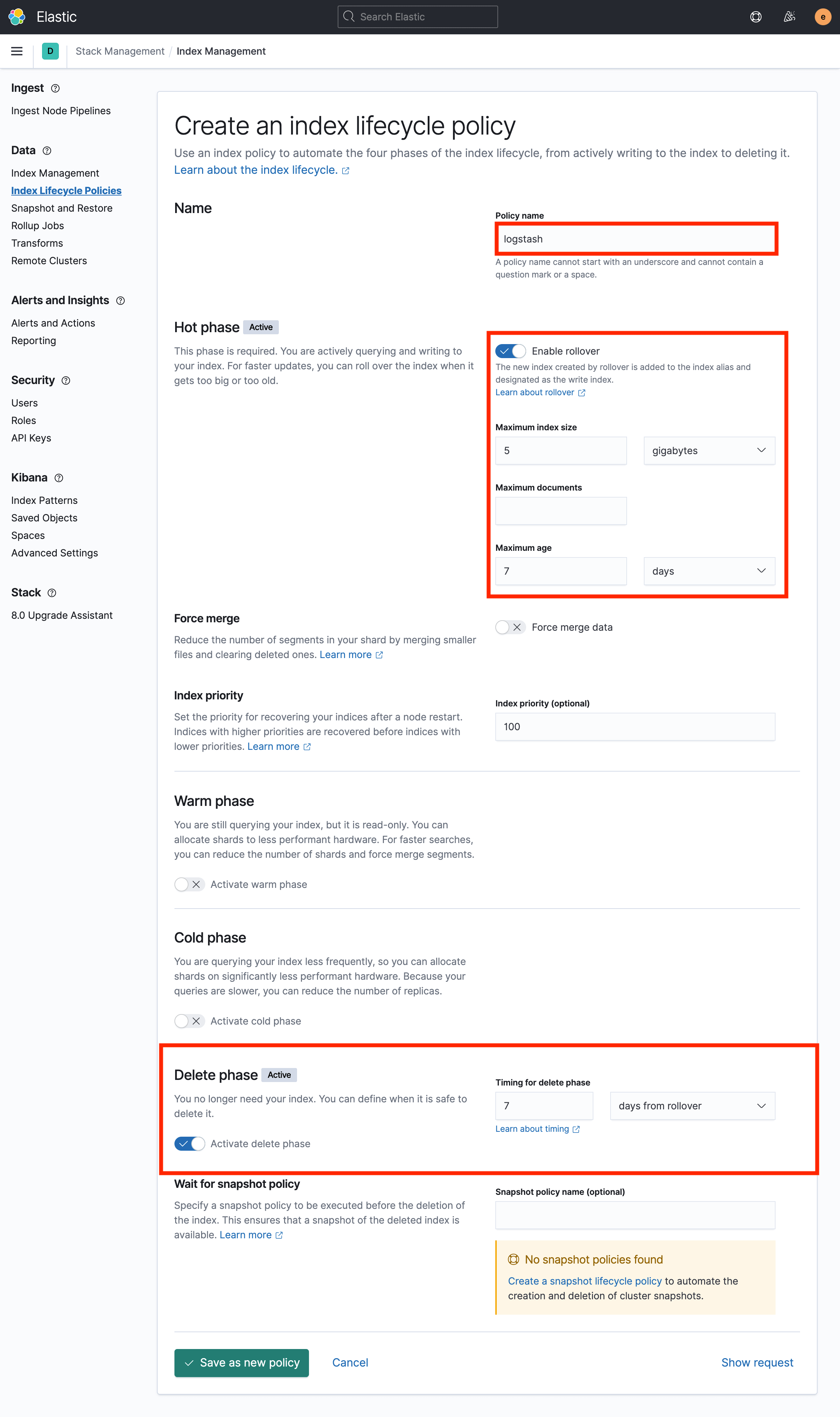

创建生命周期策略

进入管理面板

创建

配置删除 7 天之前的日志

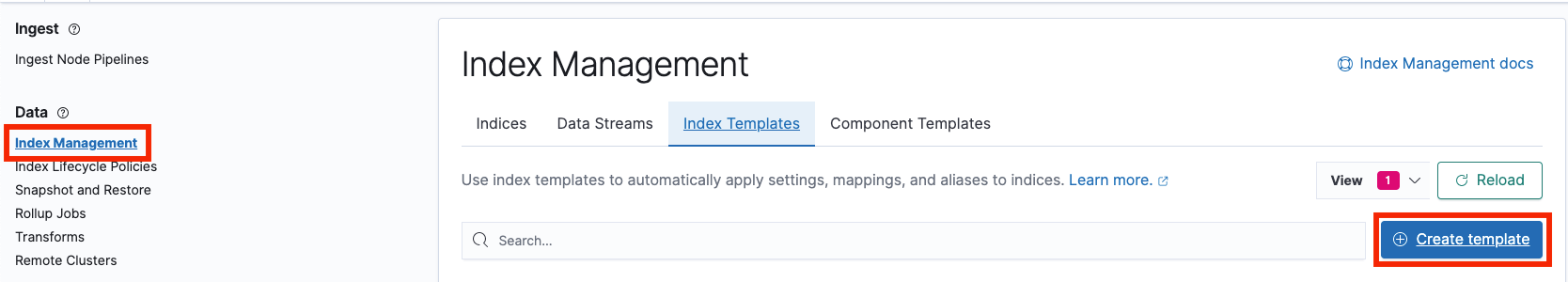

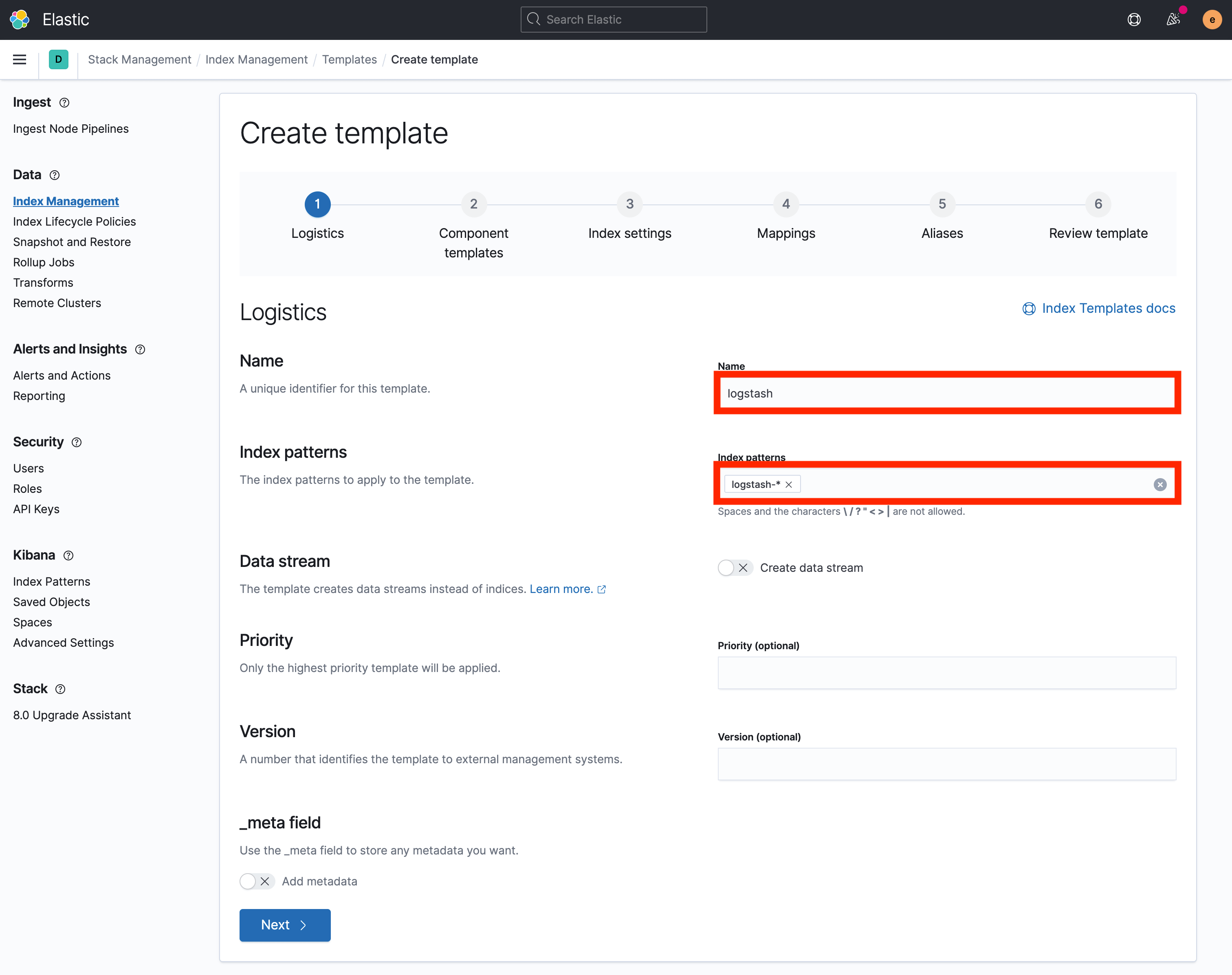

创建 index 模板

创建

配置参数

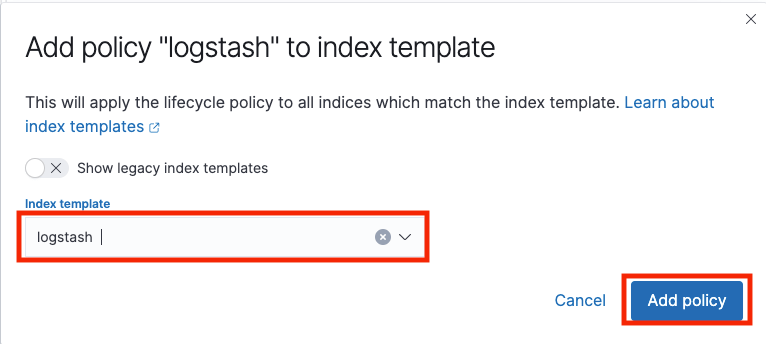

将模板加入策略
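The steps above go through the Kibana UI. Roughly the same setup can be sketched with the Elasticsearch REST API, which is handy if you want the policy in version control. This is a sketch under assumptions, not the configuration used in this article: the policy name logstash-delete-after-7d and the template name logstash are made up, ES_URL and ELASTIC_PASSWORD are environment variables you would set yourself, and the composable index template endpoint (_index_template) requires Elasticsearch 7.8 or later.

```shell
#!/bin/sh
# Hypothetical names; adjust them to your environment.
POLICY_NAME="logstash-delete-after-7d"
TEMPLATE_NAME="logstash"

# ILM policy body: delete an index once it is 7 days old.
ilm_policy_json() {
  cat <<'EOF'
{
  "policy": {
    "phases": {
      "delete": {
        "min_age": "7d",
        "actions": { "delete": {} }
      }
    }
  }
}
EOF
}

# Index template body: attach the policy to every new logstash-* index.
index_template_json() {
  cat <<EOF
{
  "index_patterns": ["logstash-*"],
  "template": {
    "settings": {
      "index.lifecycle.name": "$POLICY_NAME"
    }
  }
}
EOF
}

# Only talk to the cluster when ES_URL is set, for example
# https://localhost:9200 behind a port-forward to service/elastic-es-http.
if [ -n "${ES_URL:-}" ]; then
  ilm_policy_json | curl --insecure -u "elastic:$ELASTIC_PASSWORD" \
    -H 'Content-Type: application/json' \
    -X PUT "$ES_URL/_ilm/policy/$POLICY_NAME" --data-binary @-
  index_template_json | curl --insecure -u "elastic:$ELASTIC_PASSWORD" \
    -H 'Content-Type: application/json' \
    -X PUT "$ES_URL/_index_template/$TEMPLATE_NAME" --data-binary @-
fi
```

Keep in mind that a template only applies to indices created after it exists, so indices that are already present would need the lifecycle setting added separately.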

CronJob

Besides ILM, old logs can also be deleted automatically with a CronJob and the DELETE API.

Deployment manifest

# index-cleaner.yaml
apiVersion: batch/v1beta1
kind: CronJob
metadata:
  name: index-cleaner
  namespace: elastic-system
spec:
  schedule: "0 0 * * *"
  jobTemplate:
    spec:
      template:
        spec:
          containers:
            - name: index-cleaner
              env:
                - name: ELASTIC_PASSWORD
                  valueFrom:
                    secretKeyRef:
                      key: elastic
                      name: elastic-es-elastic-user
              image: makeoptim/es-index-cleaner
              command: ["/bin/sh", "-c"]
              args:
                [
                  "curl -v --insecure -u elastic:$ELASTIC_PASSWORD -XDELETE https://elastic-es-http:9200/logstash-*`date -d'7 days ago' +'%Y.%m.%d'`",
                ]
          restartPolicy: Never
      backoffLimit: 2

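Notes:

- The source code of the makeoptim/es-index-cleaner image is available at https://github.com/MakeOptim/es-index-cleaner
- date -d'7 days ago' +'%Y.%m.%d' yields the date 7 days ago; change the number of days to suit your needs
- --insecure is needed because the ECK deployment uses a self-signed certificate
- -u elastic:$ELASTIC_PASSWORD authenticates as that account
- batch/v1beta1 was the CronJob API version current when this was written; CronJob is served as batch/v1 from Kubernetes 1.21, and batch/v1beta1 was removed in 1.25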
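To make the DELETE target more concrete, here is a small sketch of how the wildcard in the curl command lines up with the per-namespace index names (logstash-<namespace>-<date>) produced by the Fluentd output. The helper names cleanup_pattern and matches are hypothetical, the script assumes GNU date, and it uses UTC explicitly, whereas the CronJob relies on whatever time zone the container runs in.

```shell
#!/bin/sh
# Hypothetical helper: wildcard index pattern for indices dated N days ago.
cleanup_pattern() {
  days=$1
  printf 'logstash-*%s\n' "$(date -u -d "$days days ago" +'%Y.%m.%d')"
}

# Hypothetical helper: succeed when index name $1 matches wildcard pattern $2.
# The unquoted $2 is deliberately treated as a shell pattern by case.
matches() {
  case $1 in
    $2) return 0 ;;
    *) return 1 ;;
  esac
}

pattern=$(cleanup_pattern 7)
echo "today's run would delete: $pattern"

# Fixed example: the pattern for 2021.01.04 covers every namespace for that day.
for index in logstash-default-2021.01.04 logstash-kube-system-2021.01.04 logstash-default-2021.01.05; do
  if matches "$index" 'logstash-*2021.01.04'; then
    echo "$index: deleted"
  else
    echo "$index: kept"
  fi
done
```

One consequence of this design is that each run only targets a single day. If a scheduled run is missed, the indices for that day are not picked up by later runs and would have to be removed by hand.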

Deploy

Run the following command to deploy the scheduled job that cleans up old logs automatically.

❯ kubectl apply -f index-cleaner.yaml

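Verify

After deployment the job runs on schedule. A few days later, you can list the jobs. In the output below the jobs are prefixed es-index-cleaner; with the manifest above, which names the CronJob index-cleaner, expect the prefix index-cleaner instead.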

❯ kubectl get job -n elastic-system
NAME                          COMPLETIONS   DURATION   AGE
es-index-cleaner-1609603200   1/1           42s        2d10h
es-index-cleaner-1609689600   1/1           7s         34h
es-index-cleaner-1609776000   1/1           58s        10h

❯ kubectl describe job es-index-cleaner-1609776000 -n elastic-system
Name:           es-index-cleaner-1609776000
Namespace:      elastic-system
......
Pods Statuses:  0 Running / 1 Succeeded / 0 Failed
......

❯ kubectl get pod -n elastic-system | grep es-index
es-index-cleaner-1609603200-tjtvm   0/1   Completed   0   2d10h
es-index-cleaner-1609689600-vkr9g   0/1   Completed   0   34h
es-index-cleaner-1609776000-7zkd5   0/1   Completed   0   10h

❯ kubectl logs es-index-cleaner-1609776000-7zkd5 -n elastic-system
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
  0     0    0     0    0     0      0      0 --:--:-- --:--:-- --:--:--     0*   Trying 172.23.4.246:9200...
* Connected to elastic-es-http (172.23.4.246) port 9200 (#0)
......
{ [21 bytes data]
100    21  100    21    0     0    840      0 --:--:-- --:--:-- --:--:--   840
* Connection #0 to host elastic-es-http left intact
{"acknowledged":true}%

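The job ran correctly, and the API returned {"acknowledged":true}, which indicates success.

Summary

Building on the EFK Log Collection article, this article uses ECK, fluentd-kubernetes-daemonset, ILM, and a CronJob to make log collection highly available and suitable for production.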
